Publications

2024

- CLiC-it 2024

To Click it or not to Click it: An Italian Dataset for Neutralising Clickbait Headlines
Daniel Russo, Oscar Araque, and Marco Guerini
Dec 2024

Clickbait is a common technique aimed at attracting a reader’s attention, although it can result in inaccuracies and lead to misinformation. This work explores the role of current Natural Language Processing methods to reduce its negative impact. To do so, a novel Italian dataset is generated, containing manual annotations for classification, spoiling, and neutralisation of clickbait. Besides, several experimental evaluations are performed, assessing the performance of current language models. On the one hand, we evaluate the performance in the task of clickbait detection in a multilingual setting, showing that augmenting the data with English instances largely improves overall performance. On the other hand, the generation tasks of clickbait spoiling and neutralisation are explored. The latter is a novel task, designed to increase the informativeness of a headline, thus removing the information gap. This work opens a new research avenue that has been largely uncharted in the Italian language.

@article{russo-etal-2024-click,
  title = {To Click it or not to Click it: An Italian Dataset for Neutralising Clickbait Headlines},
  author = {Russo, Daniel and Araque, Oscar and Guerini, Marco},
  month = dec,
  year = {2024},
  booktitle = {Proceedings of the Tenth Italian Conference on Computational Linguistics},
  url = {https://ceur-ws.org/Vol-3878/90_main_long.pdf},
}

- ArXiv

Face the Facts! Evaluating RAG-based Fact-checking Pipelines in Realistic Settings
Daniel Russo, Stefano Menini, Jacopo Staiano, and Marco Guerini
arXiv preprint arXiv:2412.15189, Dec 2024

2023

- PoliticIT at EVALITA 2023: Overview of the Political Ideology Detection in Italian Texts Task
  Daniel Russo, Salud María Jiménez-Zafra, José Antonio García-Díaz, Tommaso Caselli, Marco Guerini, Luis Alfonso Ureña López, and Rafael Valencia-García
  In International Workshop on Evaluation of Natural Language and Speech Tools for Italian, Dec 2023

This paper presents the PoliticIT 2023 shared task, organised at EVALITA 2023 workshop. The task aims to extract politicians’ ideology information from a set of tweets in Italian framed as a binary and a multiclass classification. The task is designed to be privacy-preserving and it is accompanied by a subtask targeting the identification of self-assigned gender as a demographic trait. The PoliticIT task attracted 7 teams that registered for the task, submitted results and presented working notes describing their systems. Most of the teams proposed transformer-based approaches, while some of them also used traditional machine learning algorithms or even a combination of both.

@inproceedings{Russo2023PoliticIT,
  title = {PoliticIT at EVALITA 2023: Overview of the Political Ideology Detection in Italian Texts Task},
  author = {Russo, Daniel and Jim{\'e}nez-Zafra, Salud Mar{\'i}a and Garc{\'i}a-D{\'i}az, Jos{\'e} Antonio and Caselli, Tommaso and Guerini, Marco and L{\'o}pez, Luis Alfonso Ure{\~n}a and Valencia-Garc{\'i}a, Rafael},
  booktitle = {International Workshop on Evaluation of Natural Language and Speech Tools for Italian},
  year = {2023},
  url = {https://ceur-ws.org/Vol-3473/paper7.pdf},
}

- Benchmarking the Generation of Fact Checking Explanations
  Daniel Russo, Serra Sinem Tekiroğlu, and Marco Guerini
  Transactions of the Association for Computational Linguistics, Dec 2023

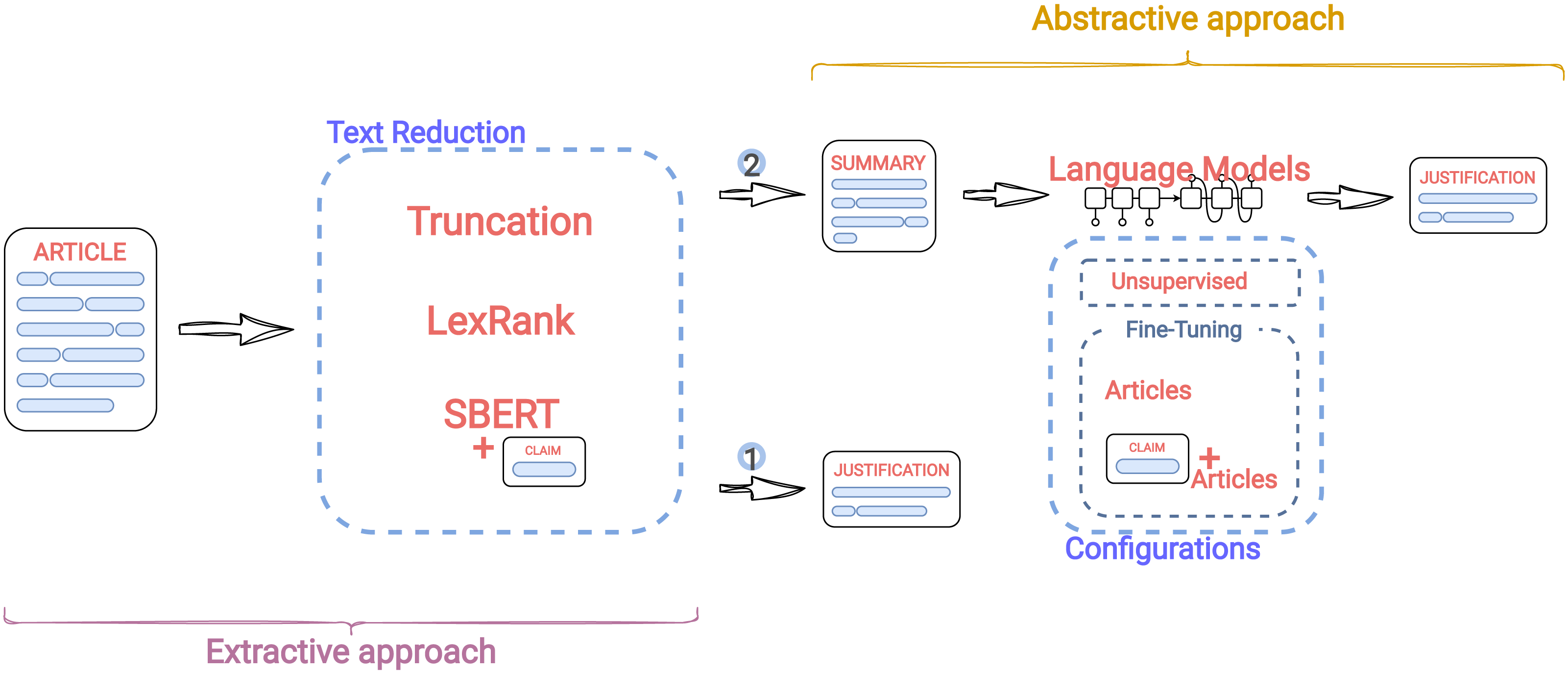

Fighting misinformation is a challenging, yet crucial, task. Despite the growing number of experts being involved in manual fact-checking, this activity is time-consuming and cannot keep up with the ever-increasing amount of fake news produced daily. Hence, automating this process is necessary to help curb misinformation. Thus far, researchers have mainly focused on claim veracity classification. In this paper, instead, we address the generation of justifications (textual explanation of why a claim is classified as either true or false) and benchmark it with novel datasets and advanced baselines. In particular, we focus on summarization approaches over unstructured knowledge (i.e., news articles) and we experiment with several extractive and abstractive strategies. We employed two datasets with different styles and structures, in order to assess the generalizability of our findings. Results show that in justification production summarization benefits from the claim information, and, in particular, that a claim-driven extractive step improves abstractive summarization performances. Finally, we show that although cross-dataset experiments suffer from performance degradation, a unique model trained on a combination of the two datasets is able to retain style information in an efficient manner.

@article{russo-etal-2023-benchmarking,
  title = {Benchmarking the Generation of Fact Checking Explanations},
  author = {Russo, Daniel and Tekiro{\u{g}}lu, Serra Sinem and Guerini, Marco},
  journal = {Transactions of the Association for Computational Linguistics},
  volume = {11},
  year = {2023},
  address = {Cambridge, MA},
  publisher = {MIT Press},
  url = {https://aclanthology.org/2023.tacl-1.71},
  doi = {10.1162/tacl_a_00601},
  pages = {1250--1264},
}

- Countering Misinformation via Emotional Response Generation
  Daniel Russo, Shane Kaszefski-Yaschuk, Jacopo Staiano, and Marco Guerini
  In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Dec 2023

The proliferation of misinformation on social media platforms (SMPs) poses a significant danger to public health, social cohesion and ultimately democracy. Previous research has shown how social correction can be an effective way to curb misinformation, by engaging directly in a constructive dialogue with users who spread – often in good faith – misleading messages. Although professional fact-checkers are crucial to debunking viral claims, they usually do not engage in conversations on social media. Thereby, significant effort has been made to automate the use of fact-checker material in social correction; however, no previous work has tried to integrate it with the style and pragmatics that are commonly employed in social media communication. To fill this gap, we present VerMouth, the first large-scale dataset comprising roughly 12 thousand claim-response pairs (linked to debunking articles), accounting for both SMP-style and basic emotions, two factors which have a significant role in misinformation credibility and spreading. To collect this dataset we used a technique based on an author-reviewer pipeline, which efficiently combines LLMs and human annotators to obtain high-quality data. We also provide comprehensive experiments showing how models trained on our proposed dataset have significant improvements in terms of output quality and generalization capabilities.

@inproceedings{russo-etal-2023-countering,
  title = {Countering Misinformation via Emotional Response Generation},
  author = {Russo, Daniel and Kaszefski-Yaschuk, Shane and Staiano, Jacopo and Guerini, Marco},
  editor = {Bouamor, Houda and Pino, Juan and Bali, Kalika},
  booktitle = {Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing},
  month = dec,
  year = {2023},
  address = {Singapore},
  publisher = {Association for Computational Linguistics},
  url = {https://aclanthology.org/2023.emnlp-main.703},
  doi = {10.18653/v1/2023.emnlp-main.703},
  pages = {11476--11492},
}